We Are Hiring!

Internships

Internships available in the Inuit team for 2026

PhD and postdoc

PhD and postdoc positions available in the Inuit team for 2024

Biography

I am an Associate Professor at IMT Atlantique and a member of the Inuit team of the Lab-STICC laboratory.

In November 2020, I defended my PhD thesis, entitled “Toward a Characterization of Perceptual Biases in Mixed Reality: A Study of Factors Inducing Distance Misperception”. The thesis was conducted jointly by Centrale Nantes, the Inria Hybrid team, and AAU Crenau, under the supervision of Guillaume Moreau, Ferran Argelaguet, and Jean-Marie Normand.

Before that, I obtained an engineering degree from Centrale Nantes in 2017.

My current research interests include human perception issues in Virtual and Augmented Reality, spatial perception in virtual and augmented environments, and more generally, the effect of perceptual biases in mixed environments.

- Augmented Reality

- Virtual Reality

- Cognition & Perception

- Human Factors / HCI

PhD in Virtual and Augmented Reality, 2020

Inria Rennes - Hybrid / UMR AAU Crenau – Centrale Nantes

'Diplôme d’Ingénieur' (equivalent to MSc), 2017

Centrale Nantes

Main Projects


Featured Publications

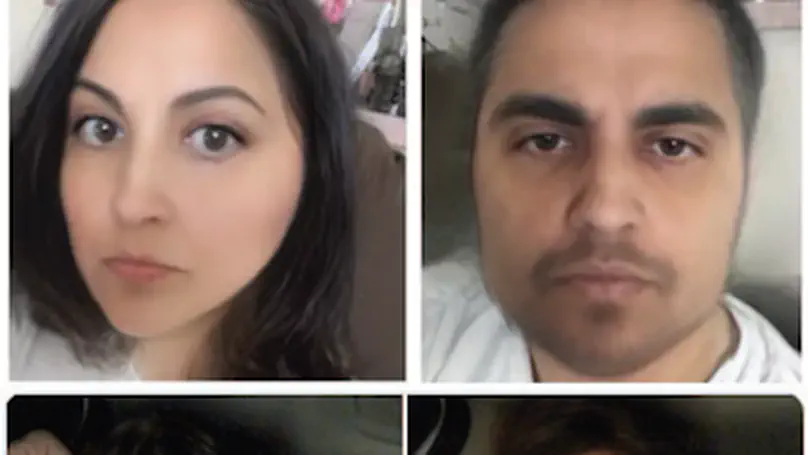

The main use of Augmented Reality (AR) by the general public today is in smartphone applications. In particular, social network applications offer many AR filters that modify not only users’ environments but also their own image. These filters are used increasingly often and can distort users’ facial traits in many ways. Yet it remains unclear how users perceive their own faces when augmented by these filters. In this paper, we present a study that evaluates the impact of different filters that either modify facial features, such as the size or position of the eyes, the shape of the face, or the orientation of the eyebrows, or add virtual content such as virtual glasses. These filters are evaluated via a self-evaluation questionnaire asking participants about the personality, emotion, appeal, and intelligence traits that their distorted face conveys. Our results show relative effects between the different filters in line with previous results on the perception of others. However, they also reveal specific effects on self-perception, showing, inter alia, that facial deformation decreases the credence participants give to their own image. By covering multiple factors, this study highlights the impact of face deformation on user perception as well as the specificities of this use of AR, paving the way for new work on the psychological impact of such filters.

Commonly used Head-Mounted Displays (HMDs) in Augmented Reality (AR), namely Optical See-Through (OST) displays, suffer from a major drawback: their lenses can only provide a fixed focal distance. This limitation is suspected to be one of the main factors behind distance misperception in AR. In this paper, we studied the use of an emerging kind of AR display to tackle such perception issues: Retinal Projection Displays (RPDs). With RPDs, virtual images have no focal distance, so the AR content is always in focus. We conducted the first reported experiment evaluating the egocentric distance perception of observers using RPDs. We compared the precision and accuracy of depth estimates between real targets and virtual targets displayed by either OST HMDs or RPDs. Our results show that, compared to OST HMDs, RPDs yield depth estimates in AR closer to those obtained for real targets. Indeed, using an OST device led to a 16% overestimation of perceived distance, whereas this overestimation bias dropped to 4% with RPDs. In addition, participants rated the task as equally difficult with both displays, and no difference in precision was found. As such, our results shed first light on the benefits of retinal projection displays for user perception in AR, suggesting that RPD is a promising technology for AR applications that require accurate distance perception.

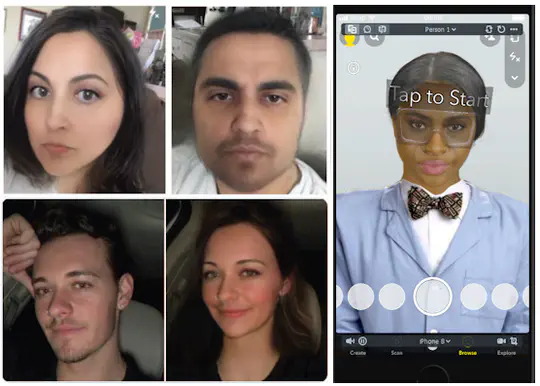

Augmented Reality (AR) filters, such as those used by social media platforms like Snapchat and Instagram, are perhaps the most commonly used AR technology. As with fully immersive Virtual Reality (VR) systems, individuals can use AR to embody different people. In VR, such embodiment experiences have been found to influence real-world biases such as sexism. However, there is little to no comparative research on the impact of AR embodiment on societal biases. This study aims to lay the groundwork by examining possible connections between using gender-changing Snapchat AR face filters and a person’s predicted implicit and explicit gender biases. We found that participants who identified with gender-manipulated versions of themselves showed both increased and decreased bias against men and women. These results depended on the user’s gender, the filter applied, and the level of identification users reported with their AR-manipulated selves. The results were similar to past VR findings but also yielded observations specific to AR that could inform future bias-intervention efforts.

Recent Publications

Contact

- etienne.peillard@imt-atlantique.fr

- +33 2 29 00 10 19

- Technopôle Brest-Iroise CS 83818, Brest Cedex 3, 29238

- From main entrance, go to the main hall and then follow “Building D3 - Département informatique” signs, to office D3-107 on Floor 1.

- Monday to Friday 9:00 to 18:30

- DM me on Twitter

- DM me on LinkedIn